MIT CSAIL’s Innovative Large Language Models

Large language models (LLMs) are becoming integral tools in programming and robotics, yet a significant gap persists on complex reasoning tasks. These systems lag behind humans partly because they cannot learn new concepts the way we do. Forming good abstractions, high-level representations of complex concepts, remains a challenge that keeps them from performing sophisticated tasks.

However, researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) have made a breakthrough: they have found a treasure trove of abstractions within natural language. Their findings, to be presented in three papers at the International Conference on Learning Representations (ICLR), suggest that everyday language can give language models rich context, enabling them to build more useful overarching representations.

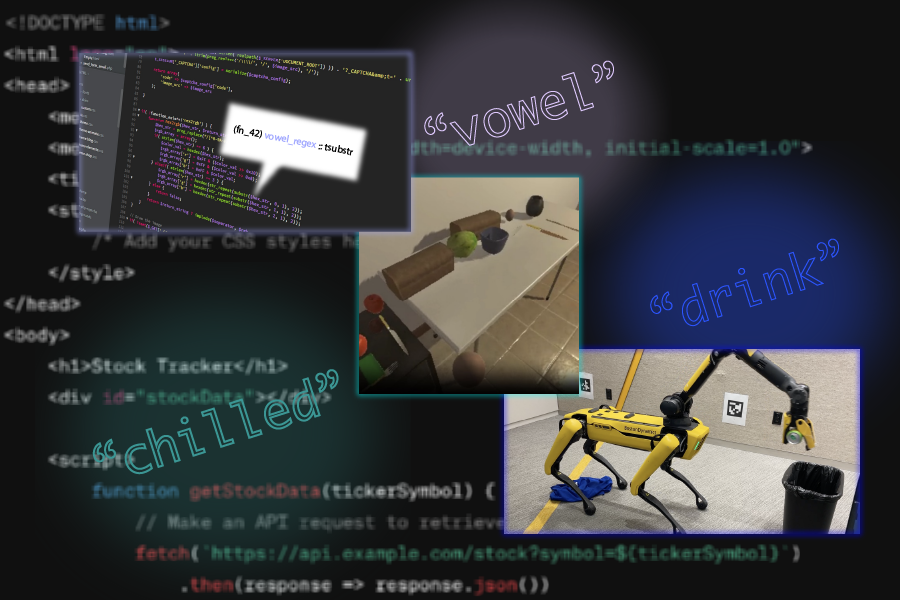

The resulting frameworks have applications ranging from code synthesis and AI planning to robotic navigation and manipulation. Each of the three, LILO, Ada, and LGA, builds a library of abstractions dedicated to its specific domain.

LILO: Pioneering Neurosymbolic Framework

LLMs can write solutions to small-scale coding problems, but creating entire software libraries like those devised by human engineers remains out of reach. To get there, AI models need to learn refactoring: consolidating and simplifying code into readable, reusable program libraries.

MIT researchers combined LLMs with an algorithmic refactoring method, the Stitch algorithm. The result is LILO, a neurosymbolic method that uses a standard LLM to write code, pairs it with Stitch to discover abstractions, and documents those abstractions comprehensively in a library.
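To make "discovering abstractions" a little more concrete, here is a toy sketch of the general idea. It is not Stitch, which performs a far more sophisticated compression-driven search over a corpus of programs; it simply counts repeated subtrees across a few programs written as nested tuples and proposes the one whose reuse would save the most nodes as a candidate library function. The tuple representation and the names `subtrees`, `size`, and `propose_abstraction` are hypothetical, chosen only for illustration.

```python
from collections import Counter
from typing import Optional, Union

# Hypothetical toy representation: a program is either a leaf (str)
# or a tuple (operator, child, child, ...).
Expr = Union[str, tuple]


def subtrees(expr: Expr):
    """Yield every compound (non-leaf) subtree of expr, including expr itself."""
    if isinstance(expr, tuple):
        yield expr
        for child in expr[1:]:
            yield from subtrees(child)


def size(expr: Expr) -> int:
    """Count the nodes in an expression tree."""
    if isinstance(expr, tuple):
        return 1 + sum(size(child) for child in expr[1:])
    return 1


def propose_abstraction(corpus: list) -> Optional[Expr]:
    """Pick the repeated subtree whose reuse saves the most nodes.

    Replacing each of c occurrences of a size-s subtree with a one-node call
    saves c * (s - 1) nodes, minus the one-time cost s of defining the helper.
    Returns None when no subtree occurs more than once.
    """
    counts = Counter(t for program in corpus for t in subtrees(program))
    candidates = {t: c for t, c in counts.items() if c > 1}
    if not candidates:
        return None
    return max(candidates, key=lambda t: candidates[t] * (size(t) - 1) - size(t))


# Usage: three hypothetical drawing programs that share a hexagon routine.
hexagon = ("repeat", "6", ("seq", ("move", "1"), ("turn", "60")))
corpus = [
    ("seq", hexagon, ("move", "2")),
    ("seq", ("move", "3"), hexagon),
    ("seq", hexagon, hexagon),
]
print(propose_abstraction(corpus))
# prints ('repeat', '6', ('seq', ('move', '1'), ('turn', '60')))
```

In LILO as described above, an abstraction found this way would then be given a readable name and documentation before entering the library; this sketch stops at the discovery step.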

LILO’s distinct focus on natural language lets it handle tasks that require human-like common-sense knowledge. On tasks ranging from identifying and removing all vowels from a string of code to drawing a snowflake, LILO outperformed standalone LLMs as well as DreamCoder, an earlier library-learning algorithm from MIT. This suggests LILO could prove useful in future software development.

Ada: Natural Language-Based AI Task Planning

As in programming, AI models that automate multi-step tasks struggle without abstractions. This is where the CSAIL-led “Ada” framework comes in. Named after the mathematician Ada Lovelace, Ada builds libraries of useful plans for tasks such as virtual kitchen chores.

The Ada method trains on potential tasks and their natural language descriptions, from which a language model proposes action abstractions. A human operator then scores and filters the best plans into a library, which the AI draws on to construct hierarchical plans for different tasks.
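How a library of action abstractions supports hierarchical planning can be illustrated with a toy expander. This sketch assumes the library has already been built (the proposing and filtering steps the article attributes to a language model and a human operator are not shown): each named abstraction maps to a list of lower-level steps, and a plan is expanded recursively until only primitive actions remain. The action names, the `LIBRARY` dictionary, and `expand` are hypothetical and do not reflect Ada's actual representation.

```python
# Hypothetical library of action abstractions: each name expands into
# a list of sub-steps, which may themselves be abstractions.
LIBRARY = {
    "make_salad": ["wash_lettuce", "chop_lettuce", "serve"],
    "wash_lettuce": ["pick_up(lettuce)", "place_in(sink)", "turn_on(faucet)"],
    "chop_lettuce": ["pick_up(lettuce)", "place_on(cutting_board)", "slice(lettuce)"],
    "serve": ["pick_up(lettuce)", "place_in(bowl)"],
}


def expand(step, library, depth=0, max_depth=10):
    """Recursively expand an abstract step into a flat list of primitive actions.

    Any step not found in the library is treated as a primitive action.
    max_depth guards against cyclic definitions in the library.
    """
    if depth > max_depth:
        raise ValueError(f"abstraction nesting too deep at {step!r}")
    if step not in library:
        return [step]
    plan = []
    for sub_step in library[step]:
        plan.extend(expand(sub_step, library, depth + 1, max_depth))
    return plan


print(expand("make_salad", LIBRARY))
# prints ['pick_up(lettuce)', 'place_in(sink)', 'turn_on(faucet)',
#         'pick_up(lettuce)', 'place_on(cutting_board)', 'slice(lettuce)',
#         'pick_up(lettuce)', 'place_in(bowl)']
```

The appeal of the hierarchy is reuse: once "wash_lettuce" is in the library, any higher-level plan can call it by name instead of re-deriving its three primitive steps.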

When the researchers incorporated the widely used large language model GPT-4 into Ada, the system significantly improved task accuracy in a kitchen simulator and in Mini Minecraft. The researchers hope to generalize the work further and envision applying it in homes.

Language-Guided Abstraction: Facilitating Robotic Tasks

The third technique in this series, language-guided abstraction (LGA), is designed to improve how AI interprets its environment. LGA draws on the context that natural language provides to identify the elements of an environment that are essential for performing a designated task.

Notably, LGA guides language models to produce environment abstractions similar to those a human operator would create, but in less time. The developers are now considering incorporating multimodal visualization to enrich the environment representations in their work.
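The core idea of LGA, using a task description to decide which parts of the environment matter, can be sketched in a few lines. In LGA a language model makes that relevance judgment; this toy version swaps the model for a hand-written keyword table, an assumption made purely to keep the example self-contained and deterministic. It keeps only the objects relevant to the instruction, yielding a smaller, task-specific view of the scene. The object names, the `RELEVANCE` table, and `abstract_state` are all hypothetical.

```python
# Hypothetical stand-in for the language model: a table mapping keywords
# that may appear in an instruction to the object types they make relevant.
RELEVANCE = {
    "bowl": {"bowl", "table", "gripper"},
    "apple": {"apple", "table", "gripper"},
    "door": {"door", "handle", "gripper"},
}


def abstract_state(instruction, scene):
    """Keep only scene objects whose type is relevant to the instruction.

    scene maps object ids to object types. The result has the same shape
    but omits objects that no keyword in the instruction marks as relevant.
    """
    relevant_types = set()
    for word in instruction.lower().split():
        # Strip trailing punctuation so "bowl." still matches "bowl".
        relevant_types |= RELEVANCE.get(word.strip(".,!?"), set())
    return {obj: kind for obj, kind in scene.items() if kind in relevant_types}


scene = {
    "obj1": "bowl",
    "obj2": "apple",
    "obj3": "table",
    "obj4": "door",
    "obj5": "gripper",
    "obj6": "lamp",
}
print(abstract_state("Bring me the bowl.", scene))
# prints {'obj1': 'bowl', 'obj3': 'table', 'obj5': 'gripper'}
```

A robot policy working from this reduced state no longer has to reason about the apple, door, or lamp, which is the kind of simplification the article credits LGA with producing.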

Mitigating AI’s Deficits

Robert Hawkins, an assistant professor at the University of Wisconsin-Madison, describes library learning as one of the most “exciting frontiers in artificial intelligence.” He emphasized that “compositional abstractions” are indispensable and praised these papers for demonstrating a plausible way to learn them.

Language models can already write high-quality code, and neurosymbolic methods that pair them with symbolic tools make it easier for them to solve more complex problems. This points toward more human-like AI models. MIT CSAIL researchers were significant contributors to all three papers, which advance our understanding of how AI can learn abstractions for programming and planning.

Together, these frameworks show how AI can learn from natural language to handle complex tasks more efficiently. As researchers continue to explore this “exciting frontier,” considerable leaps in AI sophistication seem a reasonable expectation.