Introduction: Understanding AI Models

In the past, building a text generation model from scratch required extensive training over several months. However, the AI landscape has evolved significantly. Today, we have pre-trained, or base, GPT models that excel at a variety of language-related tasks and power products such as ChatGPT.

GPT (Generative Pre-trained Transformer) models are pre-trained on text drawn from large portions of the public web, which suits general-purpose chatbots like ChatGPT. These models do a good job of answering questions when the answer is present, at least implicitly, in the training dataset. Essentially, they have learned from vast portions of internet content up until 2021.

The problem arises when the model, which is designed to generate content, doesn’t know the answer it needs to produce. This is when we encounter so-called “hallucinations.” They occur most often with highly specific industry or company information. The model invents an answer because its primary function is generating content, not providing accurate information.

Base models serve as a solid foundation for further improvement. To avoid hallucinations and help the model find correct answers to factual questions, we can build on them with techniques such as fine-tuning, embeddings, and prompt engineering.

The Role of Fine-Tuning

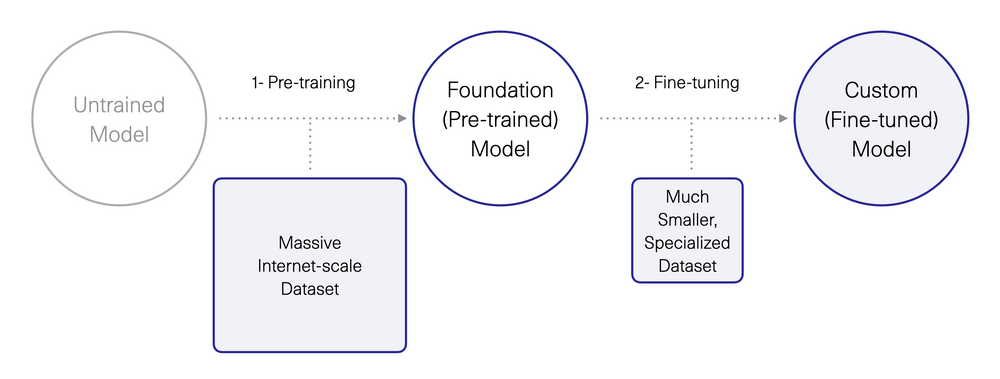

The key concepts in understanding AI models are the “Foundation/Base Model” and “Fine-tuned model.” With a pre-trained foundation model, it is possible to enhance its capabilities by fine-tuning it on a smaller dataset, refining its performance for a specific task.

Fine-tuning involves uploading a set of examples comprising prompts and completions to enhance the GPT-3 base model for specific use cases. It’s a common approach, updating the weights of a pre-trained model by training on a supervised dataset tailored to the desired task, typically utilizing thousands to hundreds of thousands of labeled examples. This method offers strong performance across various benchmarks.
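As a rough illustration of what such a set of examples can look like, the sketch below serializes prompt and completion pairs as JSON Lines, one JSON object per line. The field names `prompt` and `completion` simply mirror the wording above, and the sample data and helper names (`to_jsonl`, `write_jsonl`) are hypothetical; the exact schema a provider expects is an assumption to verify against its documentation.

```python
import json

# Hypothetical prompt/completion pairs; a real dataset would hold far more.
examples = [
    {
        "prompt": "Customer: Where is my order?\nAgent:",
        "completion": " Let me check the tracking status for you.",
    },
    {
        "prompt": "Customer: Can I change my delivery address?\nAgent:",
        "completion": " Yes, as long as the parcel has not shipped yet.",
    },
]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


def write_jsonl(records, path):
    """Write the records to a UTF-8 encoded .jsonl file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(to_jsonl(records))


if __name__ == "__main__":
    print(to_jsonl(examples), end="")
```

Keeping the serialization in `to_jsonl` separate from the file write makes it easy to validate the dataset in memory before uploading anything.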

Economic Value of Fine-Tuning

Fine-tuning holds economic value as it allows businesses to build proprietary custom models, even if the original model was publicly accessible or open source. By leveraging existing foundation models and tailoring them to specific requirements, companies can gain a competitive advantage.

One popular solution to the hallucination problem has been fine-tuning, which involves updating the weights of the base GPT model. However, according to OpenAI, the recommended solution for answering questions about technical material, news, and similar factual content is “embeddings,” for which they have launched a specific API.

This way, fine-tuning is reserved for customizing more general aspects, such as communication style or vocabulary usage.

OpenAI currently recommends fine-tuning for more general tuning, such as language style and keywords, and embeddings for company- or industry-specific knowledge. This implies three layers: the pre-trained model, the fine-tuned model, and embeddings.

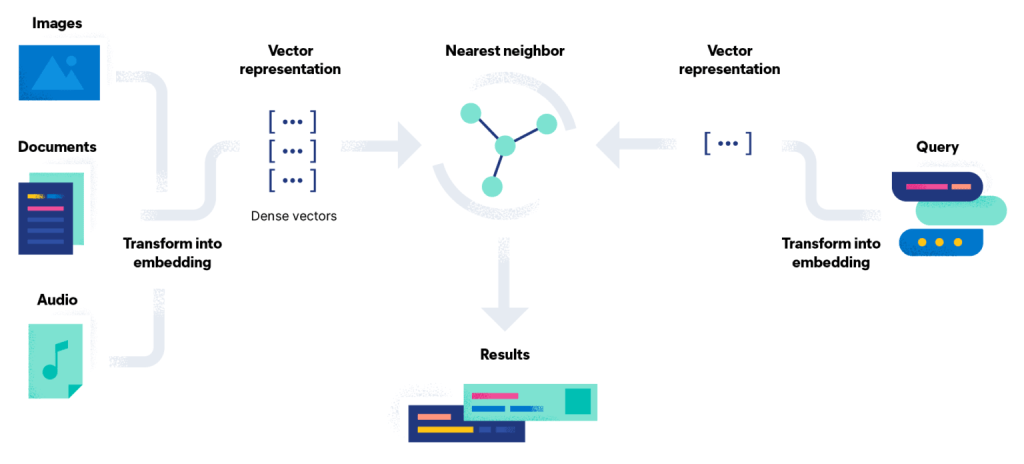

Pic: Elastic

Embeddings: A New Layer of Information

For those operating at the application layer, incorporating fine-tuned custom models from the model layer can be a differentiating factor. This can be achieved easily by utilizing managed language model providers that simplify the fine-tuning process. Such providers enable convenient experimentation with numerous custom models, paving the way for innovation and accelerated progress.

With the Embeddings API, we provide a separate document containing relevant information. The sections of that document and the user’s query are converted into vectors, the sections most similar to the query are retrieved, and a new prompt is built from them so the model can answer the question using that information.
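The retrieval step described above can be sketched in a few lines. To keep the example self-contained, it does not call any real embeddings API: `toy_embed` is a hypothetical stand-in that counts words, whereas a real system would request dense vectors from an embedding model. The sample sections, the prompt wording, and all function names are assumptions for illustration only.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_sections(query, sections, k=1):
    """Return the k sections most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(
        sections,
        key=lambda s: cosine_similarity(q, toy_embed(s)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, sections, k=1):
    """Build a new prompt that places the retrieved sections before the question."""
    context = "\n".join(top_sections(query, sections, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical company document, already split into sections.
sections = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our support team is available Monday to Friday from 9:00 to 17:00.",
    "Shipping to European countries takes three to five business days.",
]

prompt = build_prompt("How long do refunds take?", sections)

if __name__ == "__main__":
    print(prompt)
```

The base model never sees the whole document, only the sections that score highest against the query, which is what keeps the prompt short and the answer grounded in the supplied text.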

There are multiple use cases for embeddings, including customer service AI assistants that answer frequently asked questions, programming bots that answer developer questions, legal bots that respond accurately to legal queries, medical bots that address patient inquiries, meeting assistants that answer questions about the key points discussed, and more.

Embeddings serve as an additional layer of information that we add to the model to handle any specific type of query.

Prompt Engineering

Prompt engineering refers to the strategic crafting of prompts or input data given to language models like GPT-3 to elicit desired responses. It involves tailoring the format, content, and structure of the prompt to optimize model performance for specific tasks or objectives.

Prompt engineering plays a crucial role in maximizing the effectiveness of fine-tuning and embeddings. By carefully designing prompts, researchers and practitioners can guide the model to generate accurate and relevant outputs, thereby enhancing overall performance across various use cases and benchmarks.

Effective prompt engineering ensures that the model receives the necessary information to understand the task at hand and generate appropriate responses, ultimately improving the efficiency and efficacy of natural language processing applications.

Zero-Shot and Few-Shot Learning

Few-shot learning entails providing the model with a small number of examples, often just a few, during inference, integrated into the prompt. GPT-3 then extrapolates to new, unseen examples. Similarly, one-shot learning involves presenting only one example to the base model at inference.

Zero-shot learning assigns a task without any examples. It is akin to one-shot learning but includes no demonstrations, relying solely on a natural language instruction that describes the task.
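The difference between these settings comes down to how many demonstrations are placed in the prompt. The sketch below builds all three variants with one helper; the `Input:`/`Output:` layout, the sentiment task, and the name `make_prompt` are illustrative assumptions, not a format required by any particular model.

```python
def make_prompt(instruction, query, demonstrations=()):
    """Build a prompt: zero-shot with no demonstrations, one-shot with one,
    few-shot with several."""
    parts = [instruction.strip(), ""]
    for source, target in demonstrations:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # The final, unanswered pair is what the model is asked to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


instruction = "Classify the sentiment of the text as positive or negative."
demos = [
    ("I loved this film.", "positive"),
    ("The service was terrible.", "negative"),
]
query = "The battery died after a day."

zero_shot = make_prompt(instruction, query)
one_shot = make_prompt(instruction, query, demos[:1])
few_shot = make_prompt(instruction, query, demos)

if __name__ == "__main__":
    print(few_shot)
```

In every variant the model weights stay untouched; the demonstrations exist only in the prompt at inference time.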