Turning Words into Numbers

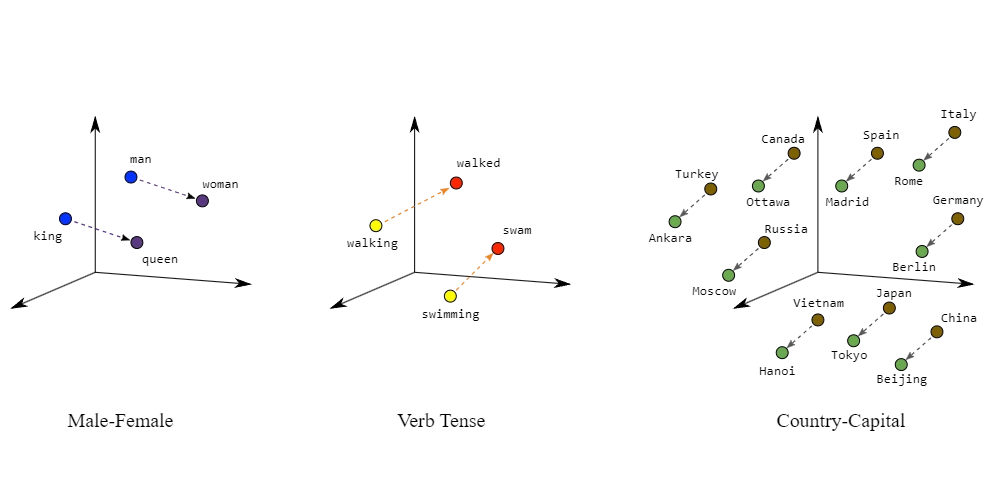

Vectorisation, also known as creating embeddings, is the process of representing text or other data in numerical form so that artificial intelligence (AI) models can work with it. In Natural Language Processing (NLP), word embeddings are a popular way of representing words as vectors of numbers.

The key idea behind vectorisation is that similar words should have similar numerical representations. In the resulting vector space, words that have similar meanings or appear in similar contexts end up close to one another.

This turns a semantic problem into a geometric one, which machines handle far more easily. By measuring the distances between vectors, AI can detect the relationships and nuances between words, which helps it understand and generate human-like text.
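To make the geometric idea concrete, the sketch below measures closeness with cosine similarity, one common choice of similarity measure. The three-dimensional vectors are hand-picked, made-up values for illustration only; real embedding models produce vectors with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings", chosen by hand for illustration only.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.75, 0.2],
    "invoice": [0.1, 0.2, 0.95],
}

print(cosine_similarity(toy_embeddings["cat"], toy_embeddings["kitten"]))
print(cosine_similarity(toy_embeddings["cat"], toy_embeddings["invoice"]))
```

The "cat" and "kitten" vectors point in almost the same direction, so their similarity is close to 1, while "cat" and "invoice" score much lower.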

Embeddings Are Simpler than Fine-tuning

Working with embeddings is also simpler than fine-tuning a language model. Instead of fine-tuning, you can use a pre-trained model such as GPT-3 to generate text vectors that capture semantic meaning and help the assistant give more accurate answers.

Platforms such as Pinecone let you store these vectors and search them efficiently. By combining GPT models with your business-specific information, you can then build an AI Assistant that powers a chatbot.

The Process of Vector Embeddings

To train a custom AI Assistant, we always follow a similar process:

- Gather information about your business. This can include details of your products, vision, mission and anything else relevant.

- Convert the collected information into numerical vectors. This involves using a pre-trained language model to encode your text into high-dimensional vectors that capture its meaning.

- Store the vectors in a vector database. This collection of vectors is called the index.

- Run queries against the index. Each query is first converted into a vector with the same model; the engine then measures the similarity between the query vector and each document vector in the index and returns the most similar results.

- Let the AI Assistant draw on this personalised source of knowledge to produce content or answers in real time.

- Finally, test the AI Assistant with sample questions and answers to make sure it generates accurate and useful responses, then refine it to improve performance and accuracy.
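The first four steps can be sketched in a few lines of Python. This is a minimal, self-contained sketch under stated assumptions: `ToyVectorIndex` is a hypothetical class that keeps vectors in memory in place of a real vector database such as Pinecone, and its `embed` method uses normalised word counts instead of a pre-trained language model, so it matches shared words rather than meaning. The business documents are made up.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyVectorIndex:
    """Hypothetical in-memory stand-in for a vector database such as Pinecone."""

    def __init__(self, documents: list[str]):
        # The vocabulary of the documents defines the dimensions of every vector.
        self.vocabulary = sorted({word for doc in documents for word in tokenize(doc)})
        self.positions = {word: i for i, word in enumerate(self.vocabulary)}
        # Steps 2 and 3: convert each document into a vector and store it (the index).
        self.documents = documents
        self.vectors = [self.embed(doc) for doc in documents]

    def embed(self, text: str) -> list[float]:
        """Toy embedding (assumption): normalised word counts.

        A real system would call a pre-trained language model here; this
        version only captures shared words, not meaning.
        """
        vector = [0.0] * len(self.vocabulary)
        for word in tokenize(text):
            if word in self.positions:  # words outside the vocabulary are ignored
                vector[self.positions[word]] += 1.0
        norm = math.sqrt(sum(v * v for v in vector))
        return [v / norm for v in vector] if norm else vector

    def query(self, question: str, top_k: int = 2) -> list[str]:
        """Step 4: embed the query the same way and return the top_k most similar documents."""
        query_vector = self.embed(question)
        # Non-zero vectors are unit length, so the dot product equals cosine similarity.
        scored = [
            (sum(q * d for q, d in zip(query_vector, doc_vector)), doc)
            for doc_vector, doc in zip(self.vectors, self.documents)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in scored[:top_k]]


# Step 1: a few made-up facts standing in for gathered business information.
business_docs = [
    "Our mission is to make solar panels affordable for every home.",
    "The Basic plan costs 10 euros per month and includes email support.",
    "Our vision is a world powered by clean energy.",
]

index = ToyVectorIndex(business_docs)
print(index.query("How much does the Basic plan cost per month?", top_k=1))
```

The query shares several words (such as "basic", "plan" and "month") with the second document and none with the others, so this prints `['The Basic plan costs 10 euros per month and includes email support.']`. Replacing `embed` with calls to a real embedding model would let the same structure match paraphrases as well as shared words.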

The assistant is now ready to go live. Depending on the size and complexity of the information, it may be beneficial to monitor and improve its performance over time with the help of human “AI Trainers”.

Helping your AI Assistant Tell the Truth

At a deeper level, embeddings are an intrinsic part of how GPT models are trained and how they work. For the purposes of this post, though, the idea behind using them can be summarised as follows:

- Create a library of pertinent information about your business.

- Use a model like ChatGPT to consult this library whenever it receives a relevant question, instead of relying only on the general knowledge it picked up during pre-training.

In this way, we greatly reduce the risk of your AI Assistant hallucinating and help it give correct, factual answers.
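A minimal sketch of the second point, assuming the relevant passages have already been retrieved from the library: the hypothetical helper `build_grounded_prompt` places them in the prompt and tells the model to answer only from them. The instruction wording is an illustrative assumption, and the call that sends the prompt to a model like ChatGPT is left out because it depends on the provider's SDK.

```python
def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Hypothetical helper: ask the model to answer only from retrieved passages.

    The instruction wording below is an illustrative assumption, not a
    prescribed template.
    """
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer the question using only the business information below. "
        "If the answer is not in the information, say that you don't know.\n\n"
        f"Business information:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "How much does the Basic plan cost per month?",
    ["The Basic plan costs 10 euros per month and includes email support."],
)
print(prompt)
```

Telling the model to admit when the answer is missing is what steers it towards the library rather than its general pre-training knowledge.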

Image source: Google Developers.